NuttX is a real-time operating system (RTOS) gaining traction in the robotics world. One of the biggest pieces of NuttX news this year was the landing of the Sora-Q (LEV-2) robot on the moon, which makes NuttX the first open-source RTOS to get there. Sora-Q runs on a Sony Spresense computer, so it is little surprise that the 2024 annual NuttX event was hosted by Sony in Tokyo. It was a great opportunity for Artefacts to report on software practices for robots running an RTOS.

The two-day event was dense, with 30 speakers covering many aspects of NuttX features and its ecosystem: new chip support, peripherals like DMA2D, graphics with LVGL, a new build system with CMake, working with Rust, and applications at home and with drones. One surprising fact was the amount of open-source contribution from a single company: Xiaomi has recently reached 51% of its code shared with the NuttX community, and gave many excellent presentations throughout the event. All talks are available on YouTube.

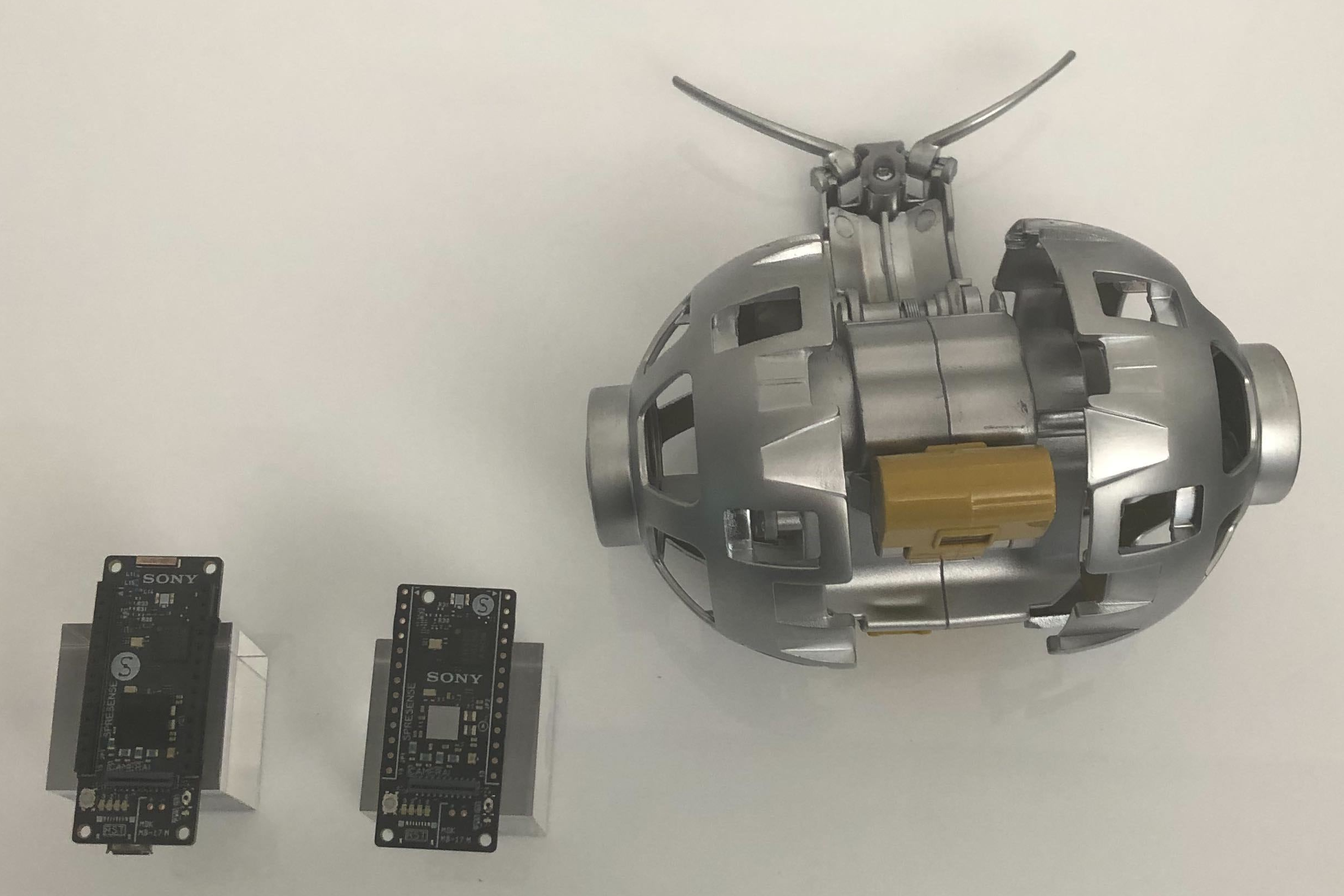

Robot applications of NuttX peppered the event, with a permanent exhibition of Sony's work with Spresense, notably the Sora-Q moon rover and an upcoming article on high-precision inertial measurement using 16 inexpensive IMU chips instead of expensive alternatives. Unfortunately, Sony had no news beyond the public information on Sora-Q, and no new images from the mission. The toy version co-developed with Tomy is apparently out of stock, with no further information on its availability, and it does not run on Spresense.

A few Sony demos of Spresense's advanced capabilities showed good performance, most impressively on a very small power budget. Applications at the event ranged from real-time classification at 1 to 15 FPS to a motion-based remote control and sound playback and generation. Most of these applications were demoed in the talk by Takayoshi Koizumi, a lead on the Spresense platform.

Beyond its commitment to OSS in NuttX, Xiaomi demoed many of its devices' capabilities, from sports bands and smartwatches to smartphones and tablets. Their work on graphics for portable devices is rich and inclusive, and supports integration with the popular LVGL library as well. In terms of robotics, Xiaomi referred to their "CyberDog" project without giving any detail at the event, but its planned availability is for 2025.

Two more talks covered robotics-related applications of NuttX: a neat project perhaps closer to automation, and work on drones. Tomasz "CeDeROM" Cedro described how NuttX helped make upgrading an old garage crane system easy and reliable. Dobrea Dan-Marius showed how using NuttX with PX4 could help make UAV applications more practical.

Lup Yuen Lee, Marco Aurelio Casaroli and Masayuki Ishikawa each presented different aspects of running NuttX in simulation and hardware emulation, including QEMU with or without hypervisors, Renode and TinyEMU. All of them bring to mind the "sim2real" problem (the simulation-to-reality gap we work on at Artefacts), yet they show progress toward simulations with higher fidelity, depth, performance and range of application. An example that resonates with Artefacts is the application of TinyEMU to continuous integration (CI), using GitHub Actions hooks to run NuttX test suites in simulation, for early alerting on potential bugs and regressions. CI is a powerful tool for detecting a wide range of problems early and conveniently for software teams, though not all of them, hence the "sim2real" gap.

An interesting discussion at the event concerned how CI with TinyEMU-based simulations should be used. Most agreed that it should not run for every software contribution (each commit), but rather on a weekly basis, allowing deep analysis while still detecting problems relatively early. This stance of the NuttX community toward CI differs from that of many other OSS projects, though most of those do not need simulation stages. At Artefacts, simulations are part of all CI features and run by default on all commits. We are certainly going to watch the NuttX experience with simulation-based CI over time, and learn from their practices!
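
To make the two positions concrete, here is a minimal sketch in Python of how a CI setup could decide which stages to run for a given trigger. It is an illustration under stated assumptions, not the NuttX or Artefacts configuration: the stage names, the `Policy` class and the `stages_for` function are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical stage names, chosen for illustration only.
FAST_STAGES = ("build", "unit-tests")
SIM_STAGES = ("emulator-test-suite",)


@dataclass(frozen=True)
class Policy:
    """When the (slower) simulation stages are allowed to run."""
    name: str
    sim_on_commit: bool
    sim_on_schedule: bool


# The position most attendees favoured: simulation on a weekly schedule only.
WEEKLY_SIM = Policy("weekly-simulation", sim_on_commit=False, sim_on_schedule=True)
# The alternative: simulation by default on every commit.
EVERY_COMMIT_SIM = Policy("simulation-on-every-commit", sim_on_commit=True, sim_on_schedule=True)


def stages_for(policy: Policy, trigger: str) -> tuple:
    """Return the stages to run for a trigger, either "commit" or "schedule"."""
    if trigger not in ("commit", "schedule"):
        raise ValueError(f"unknown trigger: {trigger!r}")
    run_sim = policy.sim_on_commit if trigger == "commit" else policy.sim_on_schedule
    # Fast stages always run; simulation stages depend on the policy.
    return FAST_STAGES + (SIM_STAGES if run_sim else ())


if __name__ == "__main__":
    for policy in (WEEKLY_SIM, EVERY_COMMIT_SIM):
        for trigger in ("commit", "schedule"):
            print(policy.name, trigger, stages_for(policy, trigger))
```

The trade-off sits in the two boolean flags: the weekly policy keeps per-commit feedback fast at the cost of later detection, while the per-commit policy pays simulation time on every change to catch regressions sooner.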

At the time of writing, Artefacts works mainly with ROS-based projects. We are considering direct support for other frameworks used in robotics, including lower-level software like NuttX. Our software stack has been designed from the outset to be independent of frameworks and build targets, and the popularity of ROS in more than 180 interviews led us to build ROS adapters very early. Supporting another way of building robots like NuttX, and at the same time working with an RTOS, is an exciting challenge ahead if we take this option. Please do get in touch if you work at the intersection of robotics and NuttX! We would like to talk to you and support your endeavours with new generations of software tools for making robots!

Extra Links: