The Artefacts team joined the Food Topping Challenge organised at ICRA 2024 by RT Corporation.

The challenge was to automate placing karaage (Japanese fried chicken) into bento boxes using a robotic manipulator. We used RT’s Foodly robot, the main hardware used in the competition, while some teams used other models such as Kawada Robotics’ NEXTAGE.

Our main approach (in retrospect, arguably a handicap compared with the other teams) was to develop mostly in simulation, and to work with real hardware only for tuning at “delivery time”, a few days before the challenge. Our goal was to evaluate how well simulation-driven development and “sim to real” practices could perform in a challenge, and how much our robotics tooling would contribute to our performance.

Simulation-Driven Development with RoboStage and Artefacts

The first step in simulation-driven development is to build a virtual environment. This often deters robotics teams, as it requires knowledge, skills and time outside their core work. We have been building RoboStage to support this incremental and demanding task.

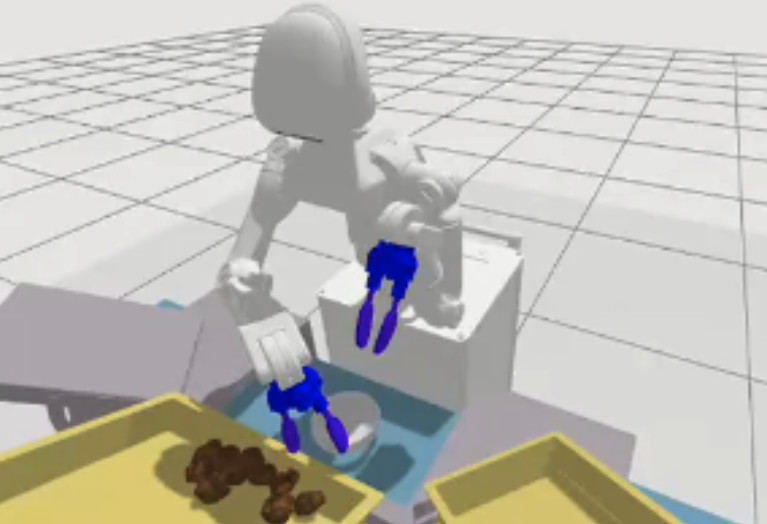

We started by generating the simplest relevant environment for the challenge, and gradually increased fidelity as the event organisers shared more concrete information about the challenge setup. The first picture shows one of our early generated setups.

The simulation already contains Foodly, a karaage tray, a conveyor belt and boxes ready to be filled. At this stage, our team could already develop the manipulation logic easily using different approaches, such as manual operation, replays and the MoveIt! library. Environments generated by RoboStage can also be run on our Artefacts platform to automatically execute a batch of tests and generate reports, much like continuous integration for robotics.

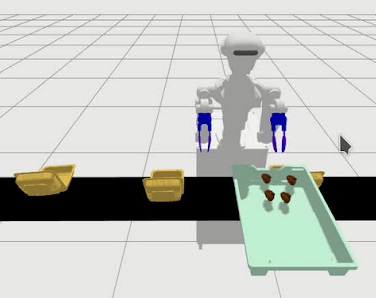

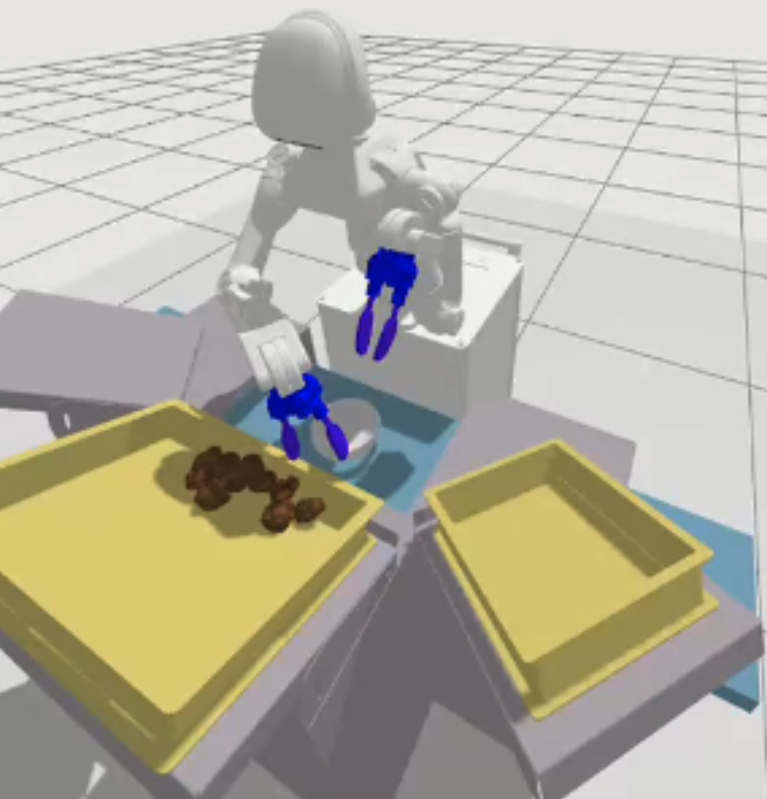

RoboStage and Artefacts promote iterative development, making it easy to refine the target environment. The next picture shows the final settings after a few iterations, with a table model provided by the event organisers, custom bowls based on the challenge model, as well as food trays and a conveyor belt.

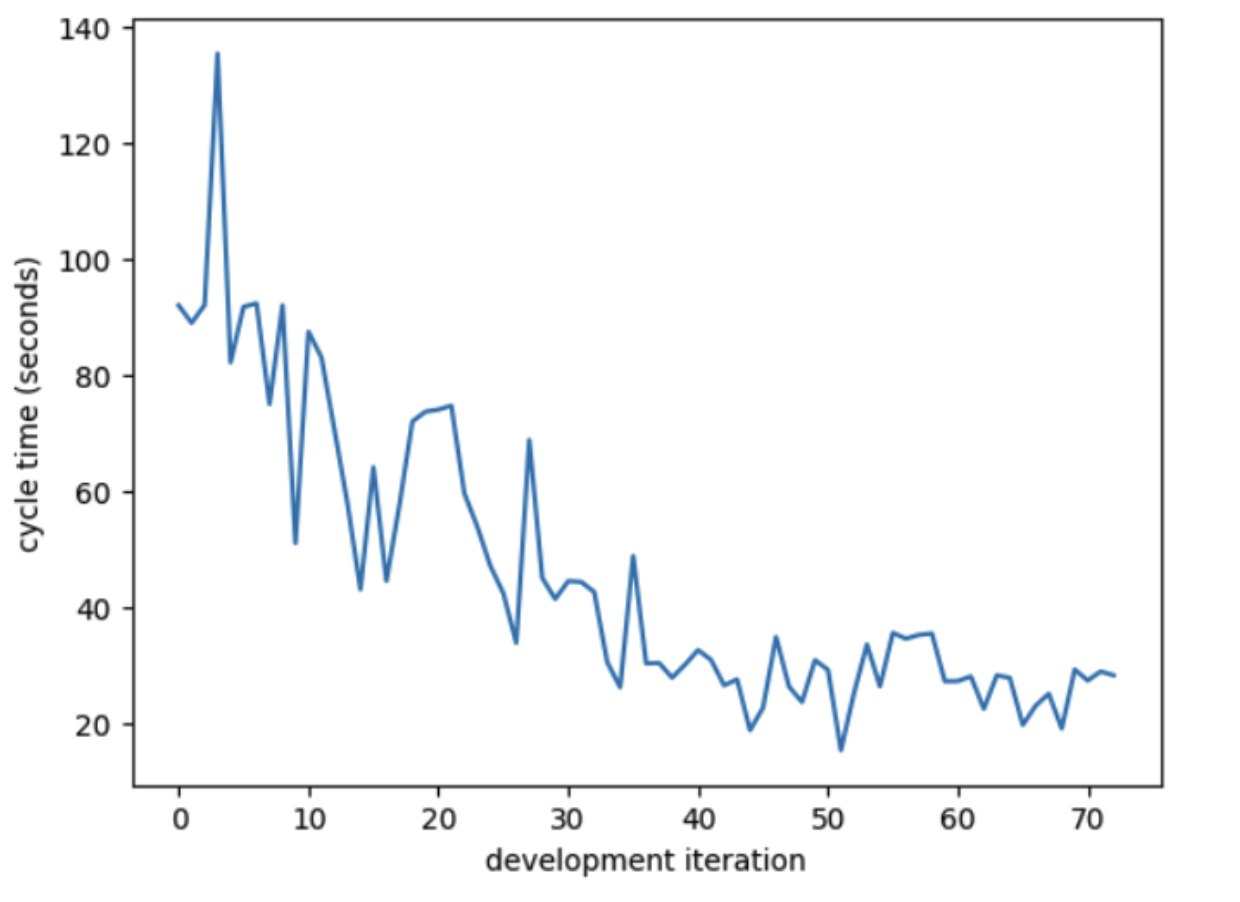

We could track our progress over iterations using the Artefacts platform, which showed whether our manipulation logic was succeeding, and how quickly. The cycle time shown in the image is generated by Artefacts and measures how long it takes the robot to put two pieces of karaage in a box.
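To make the cycle-time metric concrete, here is a minimal Python sketch of how it could be computed from logged events. It assumes the simulation records when each box reaches the filling position and when each karaage is placed; the event format and the `cycle_times` function are hypothetical, and Artefacts may compute the metric differently.

```python
from typing import Dict, Iterable, List, Tuple


def cycle_times(box_ready: Dict[str, float],
                placements: Iterable[Tuple[float, str]],
                pieces_per_box: int = 2) -> Dict[str, float]:
    """Seconds from a box becoming ready to its last required placement.

    box_ready:  {box_id: time the box reached the filling position, in s}
    placements: (time, box_id) for every karaage placed
    Boxes that never received `pieces_per_box` pieces are left out.
    """
    placed: Dict[str, List[float]] = {}
    for t, box_id in sorted(placements):
        placed.setdefault(box_id, []).append(t)

    result: Dict[str, float] = {}
    for box_id, t_ready in box_ready.items():
        times = placed.get(box_id, [])
        if len(times) >= pieces_per_box:
            # Time of the N-th placement minus the time the box became ready.
            result[box_id] = times[pieces_per_box - 1] - t_ready
    return result


# Hypothetical log: box3 only received one piece, so it has no cycle time.
box_ready = {"box1": 0.0, "box2": 20.0, "box3": 40.0}
placements = [(6.5, "box1"), (12.0, "box1"), (27.0, "box2"),
              (33.5, "box2"), (46.0, "box3")]
print(cycle_times(box_ready, placements))  # {'box1': 12.0, 'box2': 13.5}
```

Leaving incomplete boxes out keeps failed runs from looking fast; a report would typically show them separately as failures.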

Simulation-driven development allowed us to develop a complete solution without the hardware, leveraging RoboStage and Artefacts to iterate quickly over ideas and alternatives (in this case: different planning libraries, different vision technologies including machine learning, and different ROS versions).

From Simulation to Target Hardware

Everything went mostly well until setup at the event, where our work hit the well-known “sim to real gap”.

Hardware Resources and Distributed Compute

Our main issue was a lack of compute on the target hardware. Our manipulation controller demanded significant GPU resources (it used a recent ML-based vision algorithm), which we had in development and simulation but which the robot's embedded computer lacked. We had prepared for this by replacing the robot computer with our own machine, which had sufficient and tested GPU resources.

Unfortunately, the camera drivers failed to work on our machine, despite identical operating system and ROS settings. We split the ROS nodes, keeping the camera software on the original machine and offloading GPU-based processing to ours. At first, images were not reaching the GPU node, but after a few Ethernet cable replacements and one more machine swap, the whole system worked end to end, albeit slower than in our earlier measurements. This adaptation consumed a day of work, leaving us only one day before the challenge started.

In more detail, we moved our vision layer to a node running on the extra GPU machine. This node became a service that the manipulation controller could call on demand. Until then, vision had been running continuously in parallel with the controller, which we found led to timing mismatches. In tandem, we updated ROS and MoveIt! on the robot computer in an attempt to resolve a warning that stale data was being received on the /tf topic.
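The switch from continuous to on-demand vision can be sketched in plain Python. This is not our ROS code: `VisionService`, `Frame`, `Detection` and the 0.5 s freshness limit are hypothetical stand-ins. Camera frames are only cached as they arrive, and inference runs when the controller asks, so each result is computed from the newest frame rather than from whatever a free-running vision loop last finished.

```python
from dataclasses import dataclass
from typing import Any, Callable, List, Optional, Tuple

Position = Tuple[float, float, float]


@dataclass
class Frame:
    stamp: float  # capture time in seconds
    data: Any     # image payload (placeholder)


@dataclass
class Detection:
    stamp: float               # stamp of the frame the result was computed from
    positions: List[Position]  # estimated karaage positions


class VisionService:
    """On-demand vision: cache frames, run the detector only when asked."""

    def __init__(self, detector: Callable[[Frame], List[Position]]):
        self._detector = detector
        self._latest: Optional[Frame] = None

    def on_frame(self, frame: Frame) -> None:
        # Camera callback: keep only the newest frame, no inference here.
        self._latest = frame

    def detect(self) -> Detection:
        # Request handler: run inference on the newest frame at call time.
        if self._latest is None:
            raise RuntimeError("no camera frame received yet")
        return Detection(self._latest.stamp, self._detector(self._latest))


def is_fresh(detection: Detection, now: float, max_age: float = 0.5) -> bool:
    """Reject results computed from frames older than max_age seconds."""
    return now - detection.stamp <= max_age


# Usage with a dummy detector standing in for the GPU model.
service = VisionService(lambda frame: [(0.40, -0.10, 0.02)])
service.on_frame(Frame(stamp=1.0, data=None))
service.on_frame(Frame(stamp=2.0, data=None))
result = service.detect()
print(result.stamp, is_fresh(result, now=2.2))  # 2.0 True
```

In a ROS system, `detect` would roughly correspond to a service request handled on the GPU machine, while `on_frame` would be the subscriber callback for the image topic published from the original robot computer.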

Distributing the compute load and updating libraries resolved our first blocker. Yet the robot stopped each run after its first task (pick). We suspected that a task completion acknowledgement was missing, possibly lost on the network of the distributed system, and was blocking the controller from moving on to the next task. Unfortunately, without time to find the root cause, we rewrote the control logic to run asynchronously, and the robot then ran through its task list.
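One possible shape for such an asynchronous rewrite is sketched with Python's `asyncio`: each task waits for its completion acknowledgement with a timeout instead of blocking indefinitely. `TaskRunner`, the `send` callback and the timeout value are hypothetical, not Foodly or ROS APIs; the simulated robot in `demo` deliberately drops the first acknowledgement.

```python
import asyncio
from typing import Awaitable, Callable, Dict, List, Tuple

SendFn = Callable[[int, str], Awaitable[None]]


class TaskRunner:
    """Run tasks in order; never wait forever for a completion ack."""

    def __init__(self, send: SendFn, ack_timeout: float = 5.0):
        self._send = send                     # dispatches a task to the robot
        self._ack_timeout = ack_timeout       # seconds to wait for an ack
        self._acks: Dict[int, asyncio.Event] = {}

    def acknowledge(self, task_id: int) -> None:
        # Called from the network/callback side when an ack arrives.
        event = self._acks.get(task_id)
        if event is not None:
            event.set()

    async def run(self, tasks: List[str]) -> List[Tuple[int, str, str]]:
        log = []
        for task_id, task in enumerate(tasks):
            event = asyncio.Event()
            self._acks[task_id] = event       # register before sending
            await self._send(task_id, task)
            try:
                await asyncio.wait_for(event.wait(), self._ack_timeout)
                log.append((task_id, task, "acked"))
            except asyncio.TimeoutError:
                # Ack lost or late: record it and move on instead of stalling.
                log.append((task_id, task, "timeout"))
            finally:
                self._acks.pop(task_id, None)
        return log


async def demo() -> List[Tuple[int, str, str]]:
    runner: TaskRunner

    async def send(task_id: int, task: str) -> None:
        # Simulated robot: finishes instantly, but the first ack is "lost".
        if task_id != 0:
            asyncio.get_running_loop().call_soon(runner.acknowledge, task_id)

    runner = TaskRunner(send, ack_timeout=0.05)
    return await runner.run(["pick", "place", "move_conveyor"])


print(asyncio.run(demo()))
# [(0, 'pick', 'timeout'), (1, 'place', 'acked'), (2, 'move_conveyor', 'acked')]
```

Moving on after a timeout is a pragmatic choice under time pressure; on a real robot, a safer variant would check the arm's actual state before starting the next task.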

From Virtual to Real Components

The robot was now completing the scenario as it did in simulation, but the karaage pose estimation seemed wrong. Was it a lighting issue? A problem with camera calibration? We finally identified the root cause: a simple yet fatal difference in data format between the virtual and real sensor configurations. An easy fix, yet a reminder of how sensitive robotics development is to proper hardware settings.
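A simple guard against this class of mistake is to diff the simulated and real sensor configurations before a run. The sketch below is hypothetical: `config_mismatches`, `check_aligned` and the field names and values are made up for illustration, and the post does not say which field actually differed.

```python
from typing import Any, Dict, Tuple

Config = Dict[str, Any]


def config_mismatches(sim: Config, real: Config) -> Dict[str, Tuple[Any, Any]]:
    """Return {key: (sim_value, real_value)} for every key whose values differ."""
    keys = sorted(set(sim) | set(real))  # keys missing on one side show up as None
    return {k: (sim.get(k), real.get(k)) for k in keys if sim.get(k) != real.get(k)}


def check_aligned(sim: Config, real: Config) -> None:
    """Fail fast before a run if simulation and hardware disagree."""
    mismatches = config_mismatches(sim, real)
    if mismatches:
        details = ", ".join(f"{k}: sim={s!r} real={r!r}"
                            for k, (s, r) in mismatches.items())
        raise ValueError(f"sensor configuration mismatch: {details}")


# Illustrative values only (hypothetical, not our actual camera settings).
sim_camera = {"encoding": "rgb8", "width": 640, "height": 480,
              "frame_id": "camera_color_optical_frame"}
real_camera = {"encoding": "bgr8", "width": 1280, "height": 720,
               "frame_id": "camera_color_optical_frame"}
print(config_mismatches(sim_camera, real_camera))
```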

A last issue appeared in the next run, oddly affecting only the right arm: the orientation control of its end effector did not match what we had observed in simulation. For lack of time before the challenge, we decided to remove skilled grasps and constrained the robot to preset vertical pick moves.
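A preset vertical pick can be expressed as a fixed top-down orientation plus straight up-and-down moves above the detected position. This minimal sketch assumes a base frame with z pointing up, a tool frame whose z axis is the approach direction, and quaternions in (x, y, z, w) order; `vertical_pick`, `TOP_DOWN` and the offsets are hypothetical. The resulting poses could then be passed to a motion planner such as MoveIt!.

```python
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float, float]
Quaternion = Tuple[float, float, float, float]  # (x, y, z, w)

# Rotation of pi about the base x axis: maps the tool z axis onto -z (straight down).
TOP_DOWN: Quaternion = (1.0, 0.0, 0.0, 0.0)


@dataclass(frozen=True)
class Pose:
    position: Point
    orientation: Quaternion


def vertical_pick(target: Point, approach_height: float = 0.10,
                  grasp_offset: float = 0.0) -> List[Pose]:
    """Pre-grasp above the target, straight down to grasp, straight back up.

    Any estimated karaage orientation is ignored on purpose: every pick uses
    the same top-down tool orientation.
    """
    x, y, z = target
    above = Pose((x, y, z + approach_height), TOP_DOWN)
    grasp = Pose((x, y, z + grasp_offset), TOP_DOWN)
    return [above, grasp, above]


for pose in vertical_pick((0.40, -0.10, 0.02)):
    print(pose)
```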

Final Contender

Our first successful run on the complete hardware system was captured on camera!

We managed at this point to pick and place with both arms, but things seemed a bit off. Foodly was now running through its plans (pick, place, move the conveyor belt); however, we noticed it would often attempt to pick a karaage seemingly in mid-air. Checking the depth cameras, we discovered that positions were measured in millimetres when they should have been in metres: another configuration gap with simulation. This was another quick fix, and we added a few refinements to deal with the wider range of situations real cameras produce: we centre-cropped the images and kept only pixels around the median depth before passing them to the vision node. This eliminated all false positives outside the tray frame, which contains all the karaage. The robot became more predictable when choosing its targets.
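These depth fixes can be sketched in plain Python. The sketch assumes the ROS depth image conventions from REP 118 (`16UC1` in millimetres, `32FC1` in metres) and interprets "median-depth pixels" as pixels within a tolerance of the median depth; the function names, the 0.6 crop ratio and the 3 cm tolerance are illustrative assumptions, not the values we used.

```python
from statistics import median
from typing import List, Optional, Sequence

DepthImage = List[List[Optional[float]]]  # metres; None marks "no reading"


def to_metres(raw: Sequence[Sequence[float]], encoding: str) -> DepthImage:
    """Convert a raw depth image to metres, mapping invalid readings to None."""
    if encoding == "16UC1":
        divisor = 1000.0  # millimetres -> metres
    elif encoding == "32FC1":
        divisor = 1.0     # already metres
    else:
        raise ValueError(f"unsupported depth encoding: {encoding}")
    # 0 and NaN both mean "no reading" (NaN is the only value not equal to itself).
    return [[v / divisor if v == v and v > 0 else None for v in row] for row in raw]


def centre_crop(image: DepthImage, ratio: float = 0.6) -> DepthImage:
    """Keep the central `ratio` fraction of rows and columns."""
    h, w = len(image), len(image[0])
    ch, cw = max(1, round(h * ratio)), max(1, round(w * ratio))
    top, left = (h - ch) // 2, (w - cw) // 2
    return [row[left:left + cw] for row in image[top:top + ch]]


def keep_near_median(image: DepthImage, tolerance: float = 0.03) -> DepthImage:
    """Drop pixels further than `tolerance` metres from the median depth."""
    valid = [v for row in image for v in row if v is not None]
    if not valid:
        return image
    m = median(valid)
    return [[v if v is not None and abs(v - m) <= tolerance else None for v in row]
            for row in image]


def preprocess(raw, encoding, ratio=0.6, tolerance=0.03) -> DepthImage:
    return keep_near_median(centre_crop(to_metres(raw, encoding), ratio), tolerance)


# Toy 5x5 image in millimetres: border pixels fall outside the crop,
# 0 is a missing reading and 1200 is an outlier far from the tray surface.
raw_mm = [
    [900, 900, 900, 900, 900],
    [900, 600, 610, 0, 900],
    [900, 605, 1200, 600, 900],
    [900, 600, 600, 600, 900],
    [900, 900, 900, 900, 900],
]
print(preprocess(raw_mm, "16UC1"))
```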

The Challenge

On the morning of the challenge, as we rehearsed and tried to restore our skilled grasp capabilities, we drew our presentation slot: first. Our Foodly's first pick and place was a success! Unfortunately, it stopped there and would not run its routine on the second bento box coming down the conveyor belt. Five other teams competed alongside us, and three managed complete runs with their solutions. Congratulations to all the participants, and utmost respect to the teams who completed the challenge!

Simulation and Robotics: Outlook

Robotics engineers know all too well that the only certainty in robotics is when the software runs correctly on its target hardware. Before that stage, anything and everything is possible. Our participation in the challenge is another example of this hard lesson.

Development in simulation is no substitute for testing and tuning on real hardware. Yet it allowed us to build a complete solution from scratch in a handful of weeks, with simulation artefacts for further testing and quick experiments. A gap with the real hardware remains, but we see it narrowing as robotics simulation tooling such as RoboStage and Artefacts matures.

The ICRA challenge this year allowed us to validate several aspects of our tools, and highlighted important requirements for closing the gap with reality, notably compute requirement estimation and sensor configuration alignment. We will be working on these extensions over the next few months, and would be delighted to hear from you if you are interested in following these efforts or getting in touch with us about them!