Let's compare the manual testing approach traditionally used in robotics with a more modern, automated approach enabled by the Artefacts platform.

The usual robotics testing life cycle is:

The pain points of traditional robotics testing are the reason we are dedicated to developing the Artefacts platform and to providing a more seamless, efficient, and enjoyable robotics development experience.

In the sections below, we highlight key aspects of each approach to robotics testing, drawn from our own experience building robots and from the feedback of the roboticists and robotics companies we are in discussion with.

Traditional / manual case:


Setting up each test requires a succession of manual commands from the developer:

- Stand up the simulation (e.g. launch Gazebo, turtlesim, or another simulator)

- Set up the test parameters (e.g. place the robot at the test's start position)

- Start the test (e.g. launch ROS nodes that compute rover odometry and send commands to the rover to execute a standard trajectory)

- Start the recording (e.g. start a rosbag recorder)

This leaves a multitude of terminal windows open, which:

- leads to a high cognitive load

- creates situations prone to timing/order mistakes (e.g. the developer realizes at the end of the test that the data recorder failed)


Whether a test has met its end-of-test criteria is often judged visually by the developer, who must therefore keep watching the simulation.

This repetitive, time-consuming task can be acceptable early in development for ‘understanding the system’, but it becomes intractable for:

- Tuning algorithm parameters / exploring the design space, where many parameter sets need to be checked

- Regression testing, where many tests must be run regularly as new features are implemented

- Simulation environments with a low real-time factor, where each test takes a long time
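The real-time factor (RTF) is the ratio of simulated time to wall-clock time, so watching a test that covers a fixed amount of simulated time costs roughly `sim_duration / RTF` seconds. The back-of-the-envelope sketch below makes that arithmetic explicit; the function name and the numbers are purely illustrative assumptions.

```python
def watch_time_s(n_tests: int, sim_duration_s: float, real_time_factor: float) -> float:
    """Wall-clock seconds spent watching n_tests runs executed one after another.

    real_time_factor = simulated seconds per wall-clock second (1.0 = real time).
    """
    if real_time_factor <= 0:
        raise ValueError("real_time_factor must be positive")
    return n_tests * sim_duration_s / real_time_factor


# Illustrative numbers only: 9 parameter sets, 60 s of simulated driving each,
# in a simulation running at a quarter of real time.
total = watch_time_s(n_tests=9, sim_duration_s=60.0, real_time_factor=0.25)
print(f"{total / 60:.0f} minutes of watching")  # 36 minutes of watching
```

Even modest sweeps add up quickly once every run has to be supervised by a person.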


Comparing results across several tests often lacks a systematic approach.

The developer checks the logs in the terminal, watches the simulation, and notes pass/fail or creates ad-hoc plots of metrics. This:

- Becomes a tedious process

- Requires a lot of context switching

- Leads to forgetting or misremembering the result of a previous test (set of parameters), which can result in drawing the wrong conclusion or iterating development in the wrong direction
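A more systematic alternative is to reduce every run to the same scalar metric, computed the same way each time, and compare runs side by side instead of relying on memory. The minimal sketch below assumes each run yields time-aligned lists of (x, y) ground-truth and estimated positions; the choice of metric (2D position RMSE) and the run data are hypothetical.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def position_rmse(ground_truth: Sequence[Point], estimate: Sequence[Point]) -> float:
    """Root-mean-square 2D position error between two time-aligned trajectories."""
    if len(ground_truth) != len(estimate) or not ground_truth:
        raise ValueError("trajectories must be non-empty and the same length")
    squared = [
        (gx - ex) ** 2 + (gy - ey) ** 2
        for (gx, gy), (ex, ey) in zip(ground_truth, estimate)
    ]
    return math.sqrt(sum(squared) / len(squared))


# Hypothetical data: the same ground-truth path, two runs with different parameters.
truth = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
runs = {
    "run_a": [(0.0, 0.0), (1.0, 0.1), (2.0, 0.2)],
    "run_b": [(0.0, 0.0), (1.1, 0.0), (2.3, 0.0)],
}

# Rank runs by the same metric, best first.
for name, est in sorted(runs.items(), key=lambda kv: position_rmse(truth, kv[1])):
    print(f"{name}: RMSE = {position_rmse(truth, est):.3f} m")
```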

Benefits of using Artefacts for each of the above pain points:


The Artefacts platform enables orchestrating the entire process in the diagram above via a single command and a YAML configuration file. This simplicity:

- removes the cognitive load on the developer

- guarantees correct timing and execution of all steps

- provides repeatability and traceability: the configuration file is version-controlled together with the developer's test code
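To make the guarantee of correct step ordering concrete, here is a minimal, hypothetical sketch of a fail-fast step runner: steps run in a fixed order, and a failing step (for example, a recorder that did not start) aborts the run immediately rather than being discovered after the test. This only illustrates the idea; it is not how Artefacts is implemented, and the step names and placeholder actions are assumptions.

```python
from typing import Callable, List, Tuple

Step = Tuple[str, Callable[[], bool]]


def run_steps(steps: List[Step]) -> List[str]:
    """Run steps in order and stop at the first one that reports failure.

    Each step is a (name, action) pair whose action returns True on success.
    Returns the names of the steps that completed successfully.
    """
    completed: List[str] = []
    for name, action in steps:
        if not action():
            raise RuntimeError(f"step '{name}' failed after {completed}")
        completed.append(name)
    return completed


# Placeholder actions (hypothetical) standing in for real launch commands.
steps: List[Step] = [
    ("start_simulator", lambda: True),
    ("set_start_pose", lambda: True),
    ("start_recorder", lambda: True),  # started and checked before the test runs
    ("start_test", lambda: True),
]
print(run_steps(steps))
```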

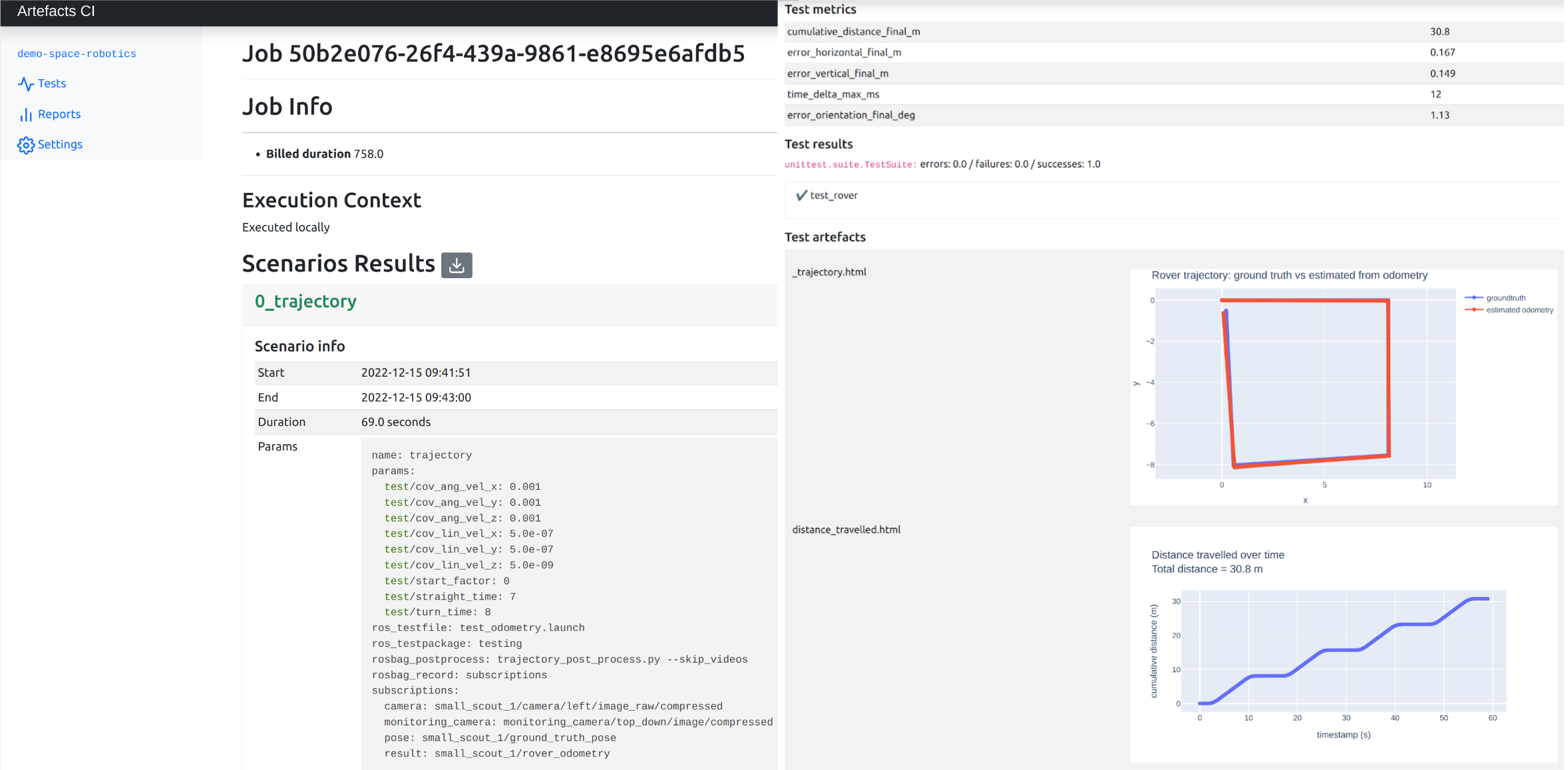

Example

`artefacts.yaml`:

```yaml
project: demo-space-robotics
jobs:
  rover_trajectory:
    type: test
    runtime:
      simulator: gazebo:11
      framework: ros1:0
    scenarios:
      settings:
        - name: trajectory
          ros_testpackage: testing
          ros_testfile: test_odometry.launch
          subscriptions:
            pose: small_scout_1/ground_truth_pose
            result: small_scout_1/rover_odometry
            camera: small_scout_1/camera/left/image_raw/compressed
            monitoring_camera: monitoring_camera/top_down/image/compressed
          rosbag_record: subscriptions
          rosbag_postprocess: trajectory_post_process.py
          params:
            cov_lin_vel_xy: [0.00000005, 0.0000005, 0.000005]
            cov_lin_vel_z: [0.0000000005, 0.000000005, 0.00000005]
```
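The `params` section lists several candidate values per parameter. Assuming that Artefacts expands list-valued params into one test run per combination (check the Artefacts configuration reference for the exact semantics), the two lists in this example would yield 3 × 3 = 9 runs. The sketch below reproduces that grid expansion in plain Python, using the values from the example.

```python
from itertools import product

# Values copied from the params section of the example artefacts.yaml.
params = {
    "cov_lin_vel_xy": [0.00000005, 0.0000005, 0.000005],
    "cov_lin_vel_z": [0.0000000005, 0.000000005, 0.00000005],
}

# Cartesian product of all value lists: one dict per parameter combination.
names = list(params)
grid = [dict(zip(names, values)) for values in product(*params.values())]

print(len(grid))  # 9
for run in grid:
    print(run)
```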

The one-time effort of defining (coding) the end-of-test criteria unlocks the ability to:

- automatically stop the test, with no need to watch it (the developer can grab a coffee instead)

- most importantly, launch several tests one after the other without developer intervention (the next morning, the developer can easily check the results of all the tests that ran overnight)
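As an illustration of coding an end-of-test criterion, the hypothetical check below ends a trajectory test with a pass when the rover gets within a distance tolerance of its goal, and with a fail when a timeout on simulated time expires first. The function name, tolerance, and timeout are assumptions for the example; in a ROS test, a check like this would typically be evaluated on each incoming pose message.

```python
import math
from typing import Optional, Tuple


def end_of_test(
    position: Tuple[float, float],
    goal: Tuple[float, float],
    elapsed_sim_s: float,
    tolerance_m: float = 0.5,
    timeout_s: float = 120.0,
) -> Optional[str]:
    """Return 'pass' or 'fail' once the test should stop, or None to keep running."""
    if math.dist(position, goal) <= tolerance_m:
        return "pass"  # goal reached within tolerance
    if elapsed_sim_s >= timeout_s:
        return "fail"  # ran out of time before reaching the goal
    return None  # test still in progress


print(end_of_test((9.8, 0.1), goal=(10.0, 0.0), elapsed_sim_s=42.0))   # pass
print(end_of_test((3.0, 0.0), goal=(10.0, 0.0), elapsed_sim_s=42.0))   # None
print(end_of_test((3.0, 0.0), goal=(10.0, 0.0), elapsed_sim_s=130.0))  # fail
```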


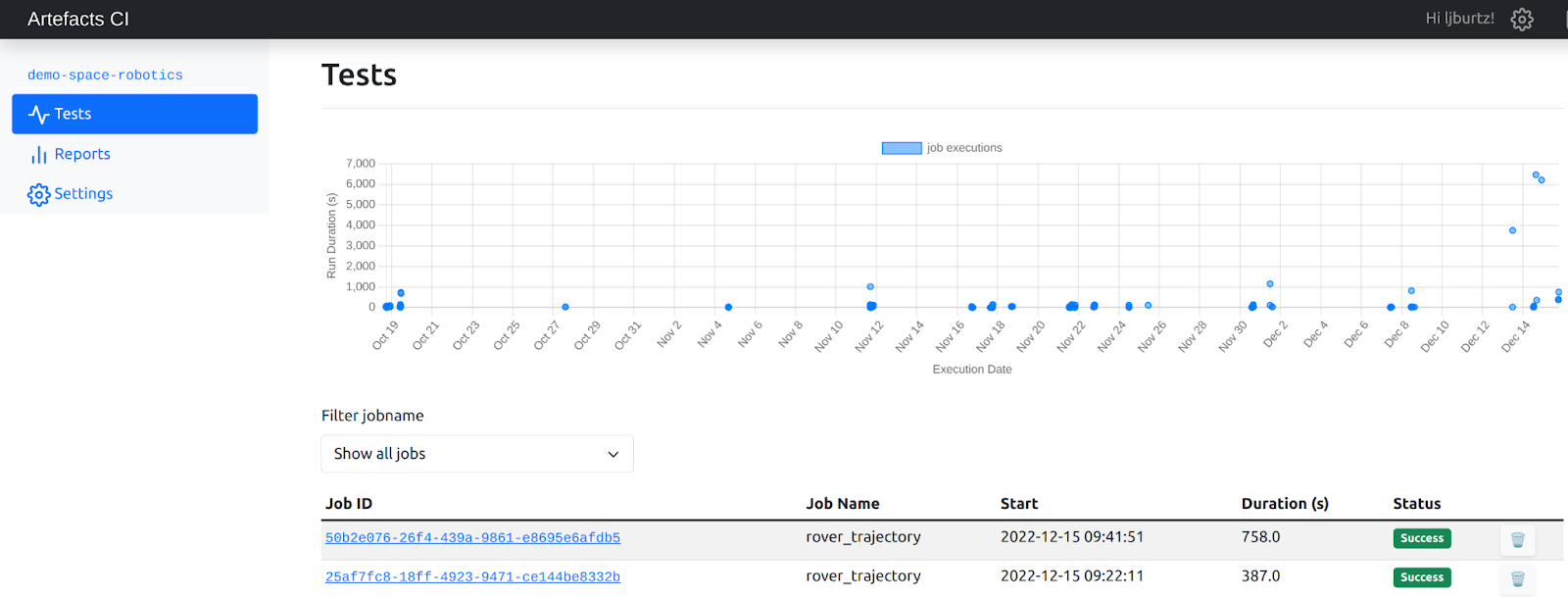

Test results and all produced artifacts are automatically logged to a central location in the cloud (the Artefacts Dashboard):

- all data is preserved without effort

- a unified interface lets you compare tests and their various types of artifacts (graphs, videos, scalar metrics)

- the developer can take a quick look in the Dashboard, then, as needed, deep-dive into the recorded rosbags with dedicated tools (Foxglove, RViz, PlotJuggler). The appropriate tool configurations can also be hosted in the cloud and shared between team members.

Example use cases for Artefacts:

- Regression testing: make sure future features don't break previous ones! (see how to implement this in a dedicated tutorial).

- Tune PID gains of motor controllers

- Tune a Kalman filter by doing several tests to explore the parameter space

- Repeatability testing: given the same inputs (trajectory/speed/terrain) how much do the outputs/metrics vary? Many robotics and simulation processes are not deterministic (see how to implement this in a dedicated tutorial).

- Evaluate rover localization performance across several trajectories (some shorter or longer, others with various crater, hill, or obstacle conditions)

- Evaluate the performance of the robot tech stack in integration tests

- Parametrize and randomize the simulated environment

- Collect data for creating machine learning datasets

- ... many others! If you can code it, you can automate it.
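For the repeatability use case, one simple way to quantify variation is to run the same scenario several times and summarize a metric with its mean, sample standard deviation, and range. The sketch below assumes one scalar metric per run; the final-position errors are made up for illustration.

```python
import statistics
from typing import Dict, Sequence


def repeatability(metric_per_run: Sequence[float]) -> Dict[str, float]:
    """Summarize how much a metric varies across repeated runs of one scenario."""
    if len(metric_per_run) < 2:
        raise ValueError("need at least two runs to estimate spread")
    return {
        "mean": statistics.mean(metric_per_run),
        "stdev": statistics.stdev(metric_per_run),  # sample standard deviation
        "range": max(metric_per_run) - min(metric_per_run),
    }


# Made-up final position errors (m) from five runs with identical inputs.
errors = [0.21, 0.19, 0.25, 0.22, 0.18]
print(repeatability(errors))
```

A large spread relative to the mean suggests that single-run comparisons between parameter sets may not be conclusive.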